This is Shigeru Shiosawa from the Advanced Technology Lab.

In this post, I’d like to introduce a method for automatically detecting people and vehicles in real time in footage captured from the upper floors of buildings or from drones.

*This initiative started over a year ago, but turning it into an article took some time due to patent-related concerns.

At the start of this initiative, we had a very hard time collecting images that look down on people from a height of around 20 to 40 meters.

Because of this, we are generating large amounts of artificial training data using 3DCG and deep learning.

Here is a rough overview of how everything works.

1. Generating base training data using 3DCG

Automatically rendered over 10,000 images of people from different angles and with different ethnicities, clothing, and poses by varying the modeling data and camera parameter settings.

2. Propagation of training data using deep learning

Generated 46 variations of each image using a GAN (Generative Adversarial Network).

3. Detecting people and vehicles with deep learning

Trained YOLO (You Only Look Once) on the data from step 2 and used it to analyze images.

Now, let’s take a closer look at each one of these steps.

1. Generating base training data using 3DCG

To generate training data without using any “real-world” photographs, we rendered human-shaped polygon models using 3D computer graphics. For this, we used models of non-Japanese people that we were able to obtain from an available source. We expected this to cause no problems: viewed from around 40 m in the air, and after the subsequent processing steps, Japanese and non-Japanese people no longer show any significant differences.

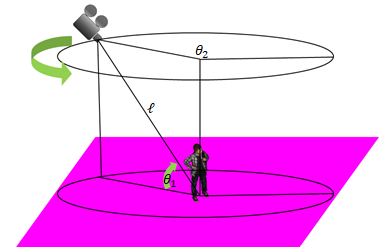

We rendered each pose while randomly changing the camera position within a limited range of angles (θ1 between 20 and 80 degrees) so that the images look as though they were taken from a drone in the sky.
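The camera sampling described above can be sketched as follows. This is a minimal illustration rather than the renderer setup actually used: it assumes that θ1 is the camera’s elevation angle above the ground plane, that the camera altitude is drawn from the 20 to 40 m range mentioned earlier, and that the subject stands at the origin. `CameraPose` and `sample_camera_pose` are hypothetical names.

```python
import math
import random
from dataclasses import dataclass
from typing import Tuple


@dataclass
class CameraPose:
    position: Tuple[float, float, float]  # (x, y, z) in meters; z is up, subject at origin
    elevation_deg: float                  # assumed meaning of theta1
    azimuth_deg: float


def sample_camera_pose(
    rng: random.Random,
    elev_range_deg: Tuple[float, float] = (20.0, 80.0),
    altitude_range_m: Tuple[float, float] = (20.0, 40.0),
) -> CameraPose:
    """Sample a drone-like viewpoint; the renderer camera should then look at the origin."""
    elev = rng.uniform(*elev_range_deg)    # theta1 (assumed: elevation angle)
    azim = rng.uniform(0.0, 360.0)         # any direction around the subject
    alt = rng.uniform(*altitude_range_m)   # camera height above the ground
    # Horizontal distance that produces the requested elevation at this altitude.
    ground_dist = alt / math.tan(math.radians(elev))
    a = math.radians(azim)
    x = ground_dist * math.cos(a)
    y = ground_dist * math.sin(a)
    return CameraPose((x, y, alt), elev, azim)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so a dataset can be regenerated
    for _ in range(3):
        print(sample_camera_pose(rng))
```

Sampling the altitude and angle directly, rather than a free 3D position, keeps every viewpoint inside the drone-like range by construction.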

[Rendering results for the human-shaped polygon models]

A 3DCG rendering of a person as seen from the sky.

These renderings are used as input data for the GAN. We used the same background color for every rendering to make the subsequent processing easier.
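One way a uniform background helps is that each person can be separated from it with a simple color comparison, which also yields a bounding box. The sketch below assumes that use; the article does not say how the background was actually exploited, and the background color, tolerance, and function names are hypothetical.

```python
import numpy as np


def foreground_mask(image: np.ndarray, bg_color, tol: int = 10) -> np.ndarray:
    """Boolean H x W mask of pixels that differ from a uniform background color.

    image: H x W x 3 uint8 array; bg_color: (R, G, B) of the background.
    """
    # Widen the dtype so the subtraction cannot wrap around.
    diff = np.abs(image.astype(np.int16) - np.asarray(bg_color, dtype=np.int16))
    # A pixel is foreground if any channel differs by more than tol.
    return diff.max(axis=-1) > tol


def bounding_box(mask: np.ndarray):
    """Tight (x_min, y_min, x_max, y_max) pixel box around the mask, or None if empty."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


if __name__ == "__main__":
    bg = (0, 255, 0)                    # hypothetical green background
    img = np.zeros((64, 48, 3), dtype=np.uint8)
    img[:] = bg
    img[10:30, 5:15] = (200, 30, 30)    # stand-in for a rendered person
    print(bounding_box(foreground_mask(img, bg)))  # -> (5, 10, 14, 29)
```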

2. Propagation of training data using deep learning

To add diversity in poses, clothing, and skin and hair colors, we generated 460,000 training images (46 variations for each of the roughly 10,000 base renderings) using a Generative Adversarial Network (GAN). As many readers may already know, GANs became widely known as the technology behind the AI-generated painting that sold for a high price at the British auction house Christie’s in late 2018.

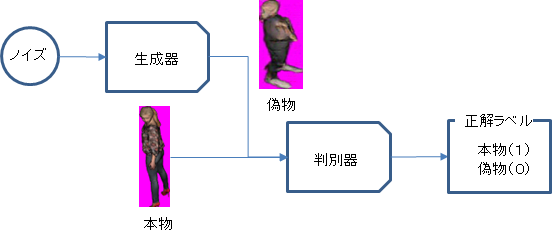

Overview of GAN

For our project, we trained two models simultaneously and had them compete with each other automatically.

• Generator: Takes random noise as input and aims to increase the probability that the discriminator mistakes its output for a real sample (an image rendered with OpenGL).

• Discriminator: Learns, as training progresses, to correctly distinguish the real samples from the images output by the generator.
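The two objectives above correspond to the standard binary cross-entropy losses of the original GAN formulation. The sketch below computes them from the discriminator’s output probabilities and uses the common “non-saturating” generator loss. The exact GAN architecture and loss used in this project are not described here, so treat this only as an illustration of the general technique.

```python
import numpy as np

_EPS = 1e-7  # keeps log() finite when a probability hits 0 or 1


def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """Binary cross-entropy for the discriminator.

    d_real: D's probabilities that real samples (the renderings) are real.
    d_fake: D's probabilities that generator outputs are real.
    The loss falls as d_real -> 1 and d_fake -> 0.
    """
    d_real = np.clip(d_real, _EPS, 1.0 - _EPS)
    d_fake = np.clip(d_fake, _EPS, 1.0 - _EPS)
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1.0 - d_fake)))


def generator_loss(d_fake: np.ndarray) -> float:
    """Non-saturating generator loss: falls as D labels generated images as real."""
    d_fake = np.clip(d_fake, _EPS, 1.0 - _EPS)
    return float(-np.mean(np.log(d_fake)))


if __name__ == "__main__":
    undecided = np.full(4, 0.5)  # D cannot tell real from generated
    print(discriminator_loss(undecided, undecided), generator_loss(undecided))
```

When the discriminator outputs 0.5 for everything, its loss is 2 ln 2 and the generator’s is ln 2, which is the equilibrium the two models push toward.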

[Generated training data]

Images obtained by feeding the rendered human-shaped polygon results into the GAN.

These are used as training data for the CNN.
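To train YOLO, each image also needs a label file in the Darknet/YOLO text format: one line per object containing a class ID followed by the box center, width, and height, all normalized by the image size. The article does not say how the annotations were produced; the helpers below are a hypothetical sketch of the conversion from pixel boxes, and the class IDs (0 for person, 1 for vehicle) are assumptions.

```python
def to_yolo_label(class_id: int, box, img_w: int, img_h: int) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) into one YOLO label line:
    "class x_center y_center width height", coordinates normalized to [0, 1]."""
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2.0 / img_w
    cy = (y_min + y_max) / 2.0 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


def label_file_text(objects, img_w: int, img_h: int) -> str:
    """Join (class_id, box) pairs into the contents of one image's .txt label file."""
    return "\n".join(to_yolo_label(c, b, img_w, img_h) for c, b in objects) + "\n"


PERSON, VEHICLE = 0, 1  # hypothetical class IDs

if __name__ == "__main__":
    print(to_yolo_label(PERSON, (100, 50, 200, 150), 400, 200))
    # -> 0 0.375000 0.500000 0.250000 0.500000
```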

3. Detecting people and vehicles with deep learning

We trained a detection model based on a convolutional neural network (CNN).

Earlier detection algorithms such as DPM and R-CNN perform region estimation and classification as separate steps, which makes their pipelines complex and slow. In this work, we used YOLO, which treats object detection as a regression problem and performs region estimation and classification simultaneously. Please see the YOLO website for details.
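To illustrate what “simultaneously” means: in the original YOLO (v1) design, the image is divided into an S × S grid, and a single forward pass regresses, for every cell, B boxes (x, y, w, h, confidence) plus C class probabilities, giving S × S × (B × 5 + C) outputs (7 × 7 × 30 with S = 7, B = 2, C = 20 in the original paper). Overlapping detections are then typically merged with non-maximum suppression (NMS). The sketch below shows both pieces in plain Python; it is not YOLO’s actual code, and the YOLO version used in this project is not specified here.

```python
def yolo_v1_output_size(S: int, B: int, C: int) -> int:
    """Values per image for a YOLOv1-style head: S x S cells, each predicting
    B boxes (x, y, w, h, confidence) plus C class probabilities."""
    return S * S * (B * 5 + C)


def iou(a, b) -> float:
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold: float = 0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes that overlap it too much, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


if __name__ == "__main__":
    print(yolo_v1_output_size(7, 2, 20))  # -> 1470
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    print(non_max_suppression(boxes, [0.9, 0.8, 0.7]))  # -> [0, 2]
```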

Below is a sample in which people were ultimately detected from above through this process.

Please note that, due to concerns about protecting personal and confidential information, we used footage we happened to have from a tall building, which shows more vehicles than people, rather than actual drone footage.

https://youtu.be/Fm_dUJmJdqA

Using this scheme, training images that are hard to obtain, or that do not exist at all, can be generated in large quantities. For example, it could be used to create 3DCG data of people drowning in order to develop an AI model for rescue operations.

This is a great scheme for anyone who has given up on a project due to a lack of training data!