Hello, this is Shige Shiozawa from the ATL.

Let me introduce a demo app that I worked on as part of our R&D.

This is an object recognition app built on the “YOLO: Real-Time Object Detection” library, which is something of a niche within the AI field.

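YOLO is a convolutional neural network (CNN) that detects objects in a video or still image in a single pass and outputs the coordinates and size (bounding box) of each detected object. Region-based detectors such as R-CNN first propose candidate regions and then classify each one. YOLO has no separate per-region step, and this is what makes it fast enough for real-time use.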
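YOLO-style detectors typically output several overlapping candidate boxes for the same object, so the candidates are filtered with non-maximum suppression (NMS) before being shown. The Python sketch below is a generic illustration of that post-processing step, not PicSum's code. It makes three assumptions: boxes are in center format `(cx, cy, w, h)`, all boxes are treated as one class for brevity (in practice NMS is usually applied per class), and the 0.45 IoU threshold is an assumed value.

```python
from typing import List, Tuple

# Center-format box: (center_x, center_y, width, height), in pixels.
Box = Tuple[float, float, float, float]

def to_corners(box: Box) -> Tuple[float, float, float, float]:
    """Convert (cx, cy, w, h) to (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two center-format boxes."""
    ax1, ay1, ax2, ay2 = to_corners(a)
    bx1, by1, bx2, by2 = to_corners(b)
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def nms(boxes: List[Box], scores: List[float],
        iou_threshold: float = 0.45) -> List[int]:
    """Greedy NMS: visit boxes by descending score and keep a box only if
    it does not overlap any already-kept box by iou_threshold or more."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) < iou_threshold for k in keep):
            keep.append(i)
    return keep

# Two near-duplicate boxes around one object, plus one separate object.
boxes = [(50, 50, 20, 20), (52, 50, 20, 20), (150, 150, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.82, so it is suppressed as a duplicate. The third box does not overlap the first and is kept.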

When you’re shopping, have you ever wanted to know the total price of the goods in your cart/basket before you reach the register, or whether the items you’re buying are sold more cheaply at a nearby store? I often find myself adding up prices from store signage by entering them by hand, and Googling fliers for nearby stores to see whether they sell the same things for less.

That is why I made “PicSum,” an app that handles these tedious calculations and lookups in an instant.

■Feature overview

Displaying the cart's total price and the lowest prices at nearby stores

Let’s try it out, assuming we are buying the ingredients for omelets.

<1> Launching the “PicSum” app

App icon

<2> Select a store from the map

Select the store where you will go shopping from the pins on the map.

Store selection

<3> Place the shopping cart/basket under the camera

Product recognition starts. Keep holding the camera over the cart/basket until all products are recognized.

Start product recognition

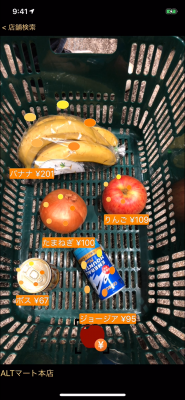

<4> Product names and prices displayed

As products are recognized, round markers attach to them, and each product's name and price are displayed in a label.

Once all products have been recognized, tap the apple icon.

Product recognition complete

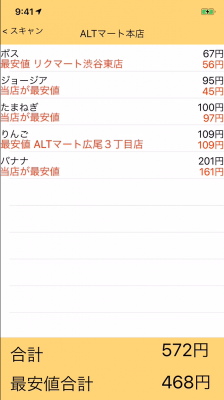
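Step <3> asks the user to keep holding the camera until every product is recognized, which implies merging results from many frames. The article does not say how PicSum does this. One simple approach is to count the confident detections for each label in every frame and keep the highest count seen for that label, as sketched below. The `(label, confidence)` detection format, the `aggregate_frames` name, and the 0.5 confidence threshold are all hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

# Hypothetical per-frame detection format: (class label, confidence score).
Detection = Tuple[str, float]

def aggregate_frames(frames: Iterable[List[Detection]],
                     min_conf: float = 0.5) -> Dict[str, int]:
    """Estimate how many of each product are in the basket.

    For every label, keep the largest number of confident detections seen
    in any single frame. Taking the maximum rather than the sum avoids
    counting the same item once per frame, while still picking up items
    that were only visible in some of the frames.
    """
    best: Counter = Counter()
    for detections in frames:
        per_frame = Counter(label for label, conf in detections if conf >= min_conf)
        for label, count in per_frame.items():
            best[label] = max(best[label], count)
    return dict(best)

frames = [
    [("apple", 0.9), ("onion", 0.4)],                # onion below threshold
    [("apple", 0.8), ("onion", 0.7), ("boss", 0.6)],
    [("apple", 0.85), ("apple", 0.75)],              # second apple now visible
]
print(aggregate_frames(frames))
```

This simple scheme cannot tell two identical items apart once they overlap. That limitation fits the summary's note that products had to be placed so they were not on top of each other.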

<5> Displaying total price and cheapest price information from nearby stores

The app displays the current total price of the products in the cart/basket, along with the cheapest prices at nearby stores.

Omelet total price screen
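Once products are recognized, the arithmetic behind step <5> is straightforward. Multiply each item's quantity by its unit price at the selected store, then look up the lowest price for each item across nearby stores. The sketch below uses hypothetical in-memory price tables (`stores`, prices in yen) and illustrative function names. The article does not describe where PicSum's price data actually comes from.

```python
from typing import Dict, Tuple

# Hypothetical price lists: store name -> {product label -> unit price in yen}.
PriceTable = Dict[str, Dict[str, int]]

def cart_total(cart: Dict[str, int], prices: Dict[str, int]) -> int:
    """Total price of the cart at one store: sum of quantity x unit price."""
    return sum(qty * prices[item] for item, qty in cart.items())

def cheapest_offers(cart: Dict[str, int],
                    stores: PriceTable) -> Dict[str, Tuple[str, int]]:
    """For each item in the cart, the nearby store with the lowest unit price.

    Items that no store lists are left out of the result.
    """
    offers: Dict[str, Tuple[str, int]] = {}
    for item in cart:
        candidates = [(p[item], name) for name, p in stores.items() if item in p]
        if candidates:
            price, name = min(candidates)  # ties broken by store name
            offers[item] = (name, price)
    return offers

# Made-up prices for an omelet shopping list.
cart = {"egg": 1, "onion": 2, "milk": 1}
stores: PriceTable = {
    "Store A": {"egg": 230, "onion": 50, "milk": 198},
    "Store B": {"egg": 198, "onion": 60},
    "Store C": {"onion": 45, "milk": 178},
}
print(cart_total(cart, stores["Store A"]))  # 528
print(cheapest_offers(cart, stores))
```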

■Accuracy of object recognition

Testing whether products with similar packaging and shapes are told apart correctly

As before, launch “PicSum” and show the cart/basket to the camera.

This time, the basket contains two items with similar shapes, an “apple” and an “onion,” as well as two types of canned coffee with the same shape but different labels (Georgia and Boss).

Furthermore, the Boss coffee is standing upright, while the Georgia coffee is lying on its side.

Confirming object recognition accuracy

It was able to differentiate “apple,” “onion,” “Georgia,” and “Boss” without a problem.

Notably, because the Boss coffee was upright, only part of its label was visible to the camera, yet the app still identified it correctly.

■Training data preparation

Training data for each product was created using the following photography process

<1> Photographed each product against a green screen for chroma keying (around 100 pictures per product)

Apple against green screen

<2> Removed the backgrounds from the photographs

The green portions were removed.
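The green-removal step can be sketched with a simple RGB rule: a pixel counts as background when its green channel exceeds both red and blue by a margin. The `remove_green` name and the margin of 40 are illustrative assumptions, and the article does not say which tool the team used. Real chroma keying often works in HSV space and needs extra cleanup around the edges.

```python
import numpy as np

def remove_green(rgb: np.ndarray, margin: int = 40) -> np.ndarray:
    """Return an RGBA copy of an RGB image with green-screen pixels made
    fully transparent.

    A pixel is treated as background when its green channel exceeds both
    red and blue by more than `margin` (an assumed, tunable threshold).
    """
    rgb = rgb.astype(np.int16)  # signed type so the subtractions cannot wrap
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    background = (g - r > margin) & (g - b > margin)
    alpha = np.where(background, 0, 255).astype(np.uint8)
    # Stack the original colors with the new alpha channel -> shape (H, W, 4).
    return np.dstack([rgb.astype(np.uint8), alpha])

# A 2x2 test image: green screen, red apple skin, a teal-ish pixel, black.
img = np.zeros((2, 2, 3), dtype=np.uint8)
img[0, 0] = (20, 200, 30)
img[0, 1] = (200, 50, 40)
img[1, 0] = (30, 120, 100)
out = remove_green(img)
print(out[..., 3])
```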

Apple without a background

<3> Image generation and background composition

For deep learning training, image generation was run 100 times, compositing the products onto various backgrounds so that the training images would vary in their backgrounds.
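The composition step can be sketched as pasting a background-free (RGBA) cutout at a random position on a background image and writing a matching bounding-box label. The sketch assumes the Darknet/YOLO text label format (`class x_center y_center width height`, normalized to the 0 to 1 range). The function name, the random placement, and the tight crop to the product's opaque pixels are illustrative choices, not the team's actual pipeline.

```python
import random
from typing import Tuple

import numpy as np

def composite(fg_rgba: np.ndarray, bg_rgb: np.ndarray, class_id: int,
              rng: random.Random) -> Tuple[np.ndarray, str]:
    """Paste an RGBA product cutout at a random position on a background.

    Returns the composite RGB image and one label line in the
    Darknet/YOLO format: "class x_center y_center width height" (0 to 1).
    """
    # Crop the cutout to its opaque pixels so the box fits the product tightly.
    ys, xs = np.nonzero(fg_rgba[..., 3])
    if ys.size == 0:
        raise ValueError("cutout has no opaque pixels")
    fg = fg_rgba[ys.min():ys.max() + 1, xs.min():xs.max() + 1]

    fh, fw = fg.shape[:2]
    bh, bw = bg_rgb.shape[:2]
    if fh > bh or fw > bw:
        raise ValueError("cutout must fit inside the background")

    # Random top-left corner (randint is inclusive at both ends).
    x0 = rng.randint(0, bw - fw)
    y0 = rng.randint(0, bh - fh)

    # Alpha-blend the cutout over the chosen region of a float copy.
    out = bg_rgb.astype(np.float32)
    alpha = fg[..., 3:4].astype(np.float32) / 255.0
    region = out[y0:y0 + fh, x0:x0 + fw]
    out[y0:y0 + fh, x0:x0 + fw] = alpha * fg[..., :3] + (1.0 - alpha) * region

    label = (f"{class_id} {(x0 + fw / 2) / bw:.6f} {(y0 + fh / 2) / bh:.6f} "
             f"{fw / bw:.6f} {fh / bh:.6f}")
    return np.clip(np.rint(out), 0, 255).astype(np.uint8), label

# A 6x10 opaque red "product" inside a 10x20 transparent cutout.
fg = np.zeros((10, 20, 4), dtype=np.uint8)
fg[2:8, 5:15] = (255, 0, 0, 255)
bg = np.zeros((100, 200, 3), dtype=np.uint8)
img, label = composite(fg, bg, 3, random.Random(0))
print(label)
```

Calling `composite` 100 times per product, each time with a randomly chosen background, would yield 100 varied training images together with their label lines.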

■Environment

Development hardware, training environment, and client environment

<Development hardware>

CPU: Core i7-8700

RAM: DDR4-2666, 16 GB x 4 (64 GB total)

GPU: NVIDIA GeForce GTX 1080 Ti x 2

SSD: M.2, 2 TB

<Training environment>

OS: Ubuntu

Library: YOLOv3

Validation data: 10% of the number of training images

Epochs: 10

<Client environment>

Smartphone: iPhone X

OS: iOS ver. 12.1
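The 10% validation split listed under the training environment can be sketched as a reproducible hold-out in Python. This sketch assumes the 10% is drawn from the pool of generated images, and the function name and seed are illustrative. Darknet reads its training and validation image lists from text files referenced by the `train` and `valid` entries of its `.data` file, so the two returned lists would typically be written out to such files.

```python
import random
from typing import List, Sequence, Tuple

def split_train_valid(paths: Sequence[str], valid_ratio: float = 0.1,
                      seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle image paths reproducibly and hold out a validation subset.

    Returns (train_paths, valid_paths); a fixed seed gives the same split
    on every run.
    """
    shuffled = list(paths)
    random.Random(seed).shuffle(shuffled)
    n_valid = max(1, round(len(shuffled) * valid_ratio))
    return shuffled[n_valid:], shuffled[:n_valid]

paths = [f"data/img_{i:05d}.jpg" for i in range(1000)]
train, valid = split_train_valid(paths)
print(len(train), len(valid))  # 900 100
```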

■Summary

Because this R&D project had a development period of only one month, we implemented only the bare minimum of features, data preparation, and training.

As a result, products in the shopping basket had to be arranged so they did not overlap, recognition could take several seconds, and the camera on the iOS device would heat up, so the app is somewhere between the alpha and beta stages. The most difficult part was the sheer amount of time it took to manually photograph dozens of different products, even though we shot them against a green screen first to make background removal easier. Perishable food was another challenge: items such as bananas degraded quickly and had to be re-purchased every week. (The food was not thrown away; members of the team took it home.)

I feel these functions would be genuinely convenient if we could raise their quality to a releasable level. One open question is how to obtain lowest-price information from nearby stores, since product information at every store changes very rapidly. Linking to a database connected to store registers may need to be considered, but this is definitely a function I would like to see in the future. I will continue my R&D, aiming to use deep learning to move toward a world where things that were once difficult to implement become just that much more achievable.