This is Shigeru Shiosawa from the Advanced Technology Lab.

As the cashless movement spreads quickly around the world, retail tech that brings innovation to retailers is catching the spotlight, with Amazon Go as its best-known example. Current retail tech analyzes in-store information, mainly customer attributes, behavioral history, customer leads, and product identification.

Normally, these processes and analyses rely on image analysis and AI running on expensive GPUs. In reality, companies like Amazon and large manufacturers with ample capital and resources are at the leading edge, while the barrier to entry remains high for small-scale stores.

Our team has long been researching ways to implement retail tech at as little cost as possible. One example is an analytical method that combines the NVIDIA Jetson Nano* (which sells for just over 10,000 JPY), a camera module, and deep learning technologies.

*An introduction to the NVIDIA Jetson Nano is at the bottom of the page.

This blog will introduce this initiative in 2 parts. We feel that we need to do everything we can to bring IT into the retail industry and help move things toward cashless.

Please note that the following demonstration experiments were conducted in our office.

• Estimated attributes

We implemented attribute estimation from camera footage using the Jetson Nano.

Here, we are estimating the “gender” and “age” of the people in the photograph.

There are many Web services (Web APIs) that determine gender and age, with the Microsoft Azure “Face API” being a well-known example. We can assume that it is used in many places, as it has relatively high accuracy and is easy to call over the Web.

At first, we considered using a Web API for attribute estimation in our tests. With an external API, the process is complete once a photograph of a face is uploaded, so it can be run fairly easily even on something like the Raspberry Pi. However, hoping to put the performance of the Jetson Nano to good use, we attempted attribute estimation on the single unit itself.

[Flow of tests]

• Face detection (Independent program)

↓

• Execute attribute estimation (attribute estimation with deep learning: model used with no changes)

For the face detection program, we used an OpenCV-based structure that had been developed for a different purpose, and for attribute estimation we used age-gender-estimation (https://github.com/yu4u/age-gender-estimation). age-gender-estimation performs attribute estimation with deep learning (DL) models, and our testing confirmed that the Jetson Nano can run these estimations on its own, with the advantage that no internet connection is needed.

Below is what the device looks like installed.

⟨⟨ Camera installed at ATL reception ⟩⟩

First, a USB Web camera was installed in a conspicuous manner underneath a tablet on a reception table. The Jetson Nano was installed on the rear side of the reception counter. We tested whether we could detect the faces and estimate the attributes of people coming in through the entrance (mostly employees) and people using the reception tablet.

Captured images and the estimated ages and genders:

⟨⟨ Results of age and gender estimation ⟩⟩

While the 2nd photograph is blurry, age and gender are still recognized.

Points of innovation:

• Scored the results of the face detection function so as to obtain the best possible face images

• Estimated attributes from the best face images (a maximum of 2) and averaged the results to reduce estimation error
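The two points above can be sketched as follows. This is only an illustration, not the program we ran: the dictionary keys (`score`, `age`, `p_female`), the sample values, and the 0.5 gender threshold are all assumptions made for the example.

```python
from statistics import mean


def select_best_faces(detections, top_k=2):
    """Return the top_k detections with the highest (hypothetical) quality score."""
    return sorted(detections, key=lambda d: d["score"], reverse=True)[:top_k]


def average_estimates(estimates):
    """Average the age and the assumed 'probability of female' over the estimates."""
    age = mean(e["age"] for e in estimates)
    p_female = mean(e["p_female"] for e in estimates)
    gender = "F" if p_female >= 0.5 else "M"  # threshold is an assumption
    return {"age": age, "gender": gender}


# Hypothetical detections of one visitor across several frames.
detections = [
    {"score": 0.62, "age": 38.0, "p_female": 0.20},
    {"score": 0.91, "age": 33.0, "p_female": 0.10},
    {"score": 0.85, "age": 35.0, "p_female": 0.30},
]

best = select_best_faces(detections)   # keeps the frames scored 0.91 and 0.85
result = average_estimates(best)
print(result)
```

Averaging over only the best-scored frames keeps a single blurry or badly lit frame from dominating the estimate.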

Within the range we saw during testing, individual differences and lighting had a large effect, but the age estimation error was around plus or minus 3 to 5 years. We were not able to test a wide range of ages this time; however, we may be able to achieve better accuracy than the age range entered at a convenience store register.

Compared with the Microsoft Face API, we can assume that our accuracy is lower for both gender and age. However, we believe that the DL model plays a part in this. Considering that the default model (the data used in age-gender-estimation) contains few Japanese faces, we believe we would need to add many images of Japanese people to the training data and retrain the model if we were aiming to improve accuracy.

The method described so far does not require a continuous internet connection and is not bound by the limits of an external API. We believe that a single unit costing a little over 10,000 JPY, which can produce these aggregates just by being placed somewhere, can be useful for data analysis in the retail industry.

• Line of sight detection function

This function identifies which products on a shelf customers are interested in, providing data for optimizing product displays.

We performed this experiment at the office refrigerator that sells drinks. We captured and quantified where on the shelf people were looking when they made a purchase. The obtained line of sight data can be displayed graphically for analysis.

[Technologies and devices used]

• Line of sight tracking library: OpenFace (https://github.com/TadasBaltrusaitis/OpenFace)

• Camera: ELECOM UCAM-DLE300T

[Use of OpenFace]

We decided to use OpenFace, which has line of sight detection functions, for this experiment. While we needed to build it from source to use it on the Jetson Nano, the build was comparatively easy because a build script (install.sh) is included.

For this test, we went ahead with the setup using install.sh because it also installs OpenCV, which we had not installed beforehand.

The following are changes we made to the script.

Change in OpenCV version: 3.6.0 to 3.6.1

Change in OpenCV options: WITH_CUDA=ON

Change in dlib version: 19.13 to 19.16

In addition, as the Jetson uses an ARM architecture, the “-msse” option in CMakeLists.txt must be disabled. During the build, any libraries or header files that turn out to be missing need to be installed one at a time with apt, etc.

When the build is complete, the OpenFace functions, including the GUI mode, can be used. Points of caution for the build are to allow a fairly long time and, if possible, to maximize performance with “$ sudo nvpmodel -m 0”. The CPU is under continuous high load during the build, and the unit can freeze if temperature and power supply are not handled carefully. If possible, install a heat sink fan (or ventilate externally) and use the 5 V/4 A jack (not microUSB) as the power source to be on the safe side.

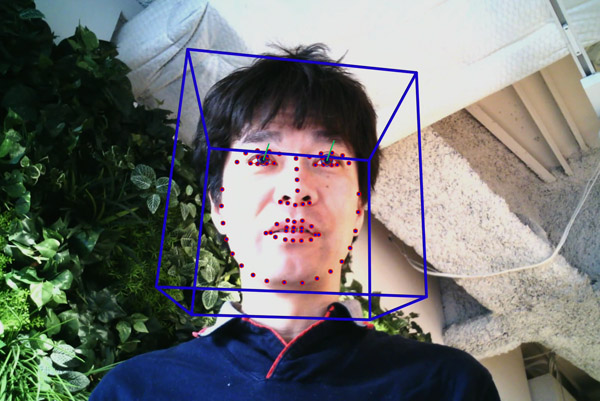

⟨⟨ OpenFace Gaze GUI display ⟩⟩

⟨⟨ OpenFace GUI numeric display: Win ⟩⟩

The figure shows OpenFace in GUI mode. As shown, various information can be obtained, such as the angle and direction of the face, and the line of sight. Other than still images and video, information from real time cameras can be used as inputs. A point of caution is that as faces and lines of sight move more frequently than expected, the FPS of video or cameras becomes important when trying to obtain detailed information.

OpenFace might show its true strengths if it were optimized for the Jetson Nano, but under default settings we could not get as high a frame rate as we had hoped from a real time camera input. (The camera performed at 7 FPS.) Because of this, for this test we recorded video on the Jetson Nano (ffmpeg + v4l2) and ran our line of sight analyses on the recorded data. As normal recordings can produce video close to 30 FPS at 720p, and as video analysis is supported, line of sight visualization as a batch-like process becomes possible. In addition, when considering use under real conditions, if line of sight movement can be visualized for each customer who comes to make a purchase, product selection can be analyzed from the “line of sight” point of view, which satisfies the requirements.

• Flow of testing

(1) Video recording

↓

(2) Analysis by OpenFace

↓

(3) Plot display

(*All executed by the Jetson Nano)
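Steps (1) and (2) of this flow could be scripted roughly as follows. This is only a sketch under assumptions: the device path, file names, recording length, and the location of the OpenFace FeatureExtraction binary are hypothetical, and the commands are printed rather than executed so they can be checked first.

```python
import shlex


def build_record_command(device="/dev/video0", out_path="shelf.mp4",
                         width=1280, height=720, fps=30, seconds=60):
    """Build an ffmpeg command that records from a V4L2 camera (step 1)."""
    return [
        "ffmpeg",
        "-f", "v4l2",                        # read from a Video4Linux2 device
        "-framerate", str(fps),
        "-video_size", f"{width}x{height}",
        "-i", device,
        "-t", str(seconds),                  # stop after this many seconds
        out_path,
    ]


def build_openface_command(video_path, out_dir="processed",
                           binary="./FeatureExtraction"):
    """Build an OpenFace FeatureExtraction command for a recorded video (step 2).

    The binary path is an assumption; it depends on where OpenFace was built.
    """
    return [binary, "-f", video_path, "-out_dir", out_dir]


record_cmd = build_record_command()
analyze_cmd = build_openface_command("shelf.mp4")
print(shlex.join(record_cmd))
print(shlex.join(analyze_cmd))
```

On the device itself, each list could be passed to `subprocess.run(cmd, check=True)` once the paths have been adjusted.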

This test and its results are shown below.

⟨⟨ Installing a camera in a refrigerator ⟩⟩

⟨⟨ Line of sight aggregated results ⟩⟩

Our aggregation method was to take the per-frame detection results output by OpenFace (a .csv file) and plot them with a Python script.
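A minimal sketch of such a script is shown here; it is not the script we actually used. It assumes the OpenFace CSV contains the columns `timestamp`, `success`, `gaze_angle_x`, and `gaze_angle_y`, and the sample rows, the `cool` colormap, and the output file name are made up for illustration. The plotting function needs matplotlib.

```python
import csv
import io


def load_gaze_points(csv_file):
    """Return (timestamp, gaze_angle_x, gaze_angle_y) for successfully tracked frames."""
    points = []
    for row in csv.DictReader(csv_file):
        # Header names may carry leading spaces, so strip keys and values.
        row = {k.strip(): (v or "").strip() for k, v in row.items() if k}
        if int(float(row["success"])) != 1:
            continue  # skip frames where tracking failed
        points.append((float(row["timestamp"]),
                       float(row["gaze_angle_x"]),
                       float(row["gaze_angle_y"])))
    return points


def plot_gaze(points, out_path="gaze.png"):
    """Scatter-plot the gaze angles, colored by time (requires matplotlib)."""
    import matplotlib
    matplotlib.use("Agg")  # render to a file, no display needed
    import matplotlib.pyplot as plt

    t, x, y = zip(*points)
    plt.scatter(x, y, c=t, cmap="cool")  # colors run from light blue to purple-pink
    plt.colorbar(label="time [s]")
    plt.xlabel("gaze_angle_x [rad]")
    plt.ylabel("gaze_angle_y [rad]")
    plt.savefig(out_path)


# Made-up sample in the assumed column layout.
sample = io.StringIO(
    "frame, face_id, timestamp, confidence, success, gaze_angle_x, gaze_angle_y\n"
    "1, 0, 0.000, 0.98, 1, -0.30, 0.10\n"
    "2, 0, 0.033, 0.20, 0, 0.00, 0.00\n"
    "3, 0, 0.067, 0.97, 1, -0.10, 0.12\n"
)
points = load_gaze_points(sample)
print(points)
```

With a real file, `plot_gaze(load_gaze_points(open(path, newline="")))` would write the scatter plot to disk.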

In the test, we had the testers move their line of sight from left to right along the 1st shelf, from right to left along the 2nd shelf, then look at the 4th shelf, and finally look at the very top shelf again.

The points show the passage of time by shifting from light blue to purple. We were able to confirm a certain amount of line of sight movement detection, starting from the left end of the 1st shelf.

Therefore, analysis can be performed, for example, on the amount of hesitation between 2 products A and B, the purchasing process due to product positions, and changes in purchased products.
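As one hypothetical way to quantify hesitation between products A and B, the sketch below counts how often the horizontal gaze angle crosses an assumed boundary between the two products. The boundary value, the sample angles, and the idea of using the switch count as a hesitation measure are all assumptions for illustration, not something we validated in this test.

```python
def count_switches(gaze_x, boundary=0.0):
    """Count how many times the gaze moves between region A and region B.

    Region A is assumed to lie left of the boundary, region B right of it.
    """
    labels = ["A" if x < boundary else "B" for x in gaze_x]
    return sum(1 for prev, cur in zip(labels, labels[1:]) if prev != cur)


# Made-up horizontal gaze angles (radians) for one customer.
gaze_x = [-0.3, -0.2, 0.1, 0.2, -0.1, -0.2, 0.3]
switches = count_switches(gaze_x)
print(switches)  # 3: A to B, B to A, A to B
```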

[Challenges]

We found that we could not use the full performance of the Jetson Nano when running OpenFace under default settings. Because of this, we believe that some tuning is needed to obtain highly accurate real time data. If real time performance improves, the system could be configured more simply, and it could not only aggregate line of sight data but also take some kind of action when a customer is hesitating over a purchase. One example to consider is a customer hesitating between beverages from company A and company B. At that moment, a display could advertise the beverage from company A as part of a campaign with company A.

[Summary]

The Jetson Nano can use NVIDIA CUDA in a Linux environment based on Ubuntu. Although there may be some limitations with memory, etc., CUDA-based DL models developed on an existing desktop can be used if the requirements are met. The amazing thing is that this is a working device the size of the palm of your hand! These points and more will help expand the possibilities of things yet to come.

Coming next is another spotlight on retail tech testing using the Jetson Nano. Stay tuned!

• About the Jetson Nano

It is a platform developed by NVIDIA for embedded applications. It is a small, low-power module that can run AI and deep learning workloads, which opens up many ideas for using it in various settings.

The Jetson family previously included 2 types, the “Jetson AGX Xavier” and the “Jetson TX1/TX2,” and in March of this year the new “Jetson Nano” was announced in the US. It is the smallest and lowest-priced member of the Jetson family.

I’ve been able to obtain the Developer Kit for this “Jetson Nano,” and now would like to review it while writing about everything I tried.

• Jetson Nano Developer Kit

The Jetson Nano Developer Kit consists of the “Jetson Nano” module attached to a development board (motherboard) equipped with interfaces. It can be purchased for around 12,000 yen in Japan.

Most of the thickness of the Jetson Nano module is its heatsink, and the module is 20 mm thick with the heatsink included. The footprint of the circuit board alone is 70 x 45 mm, just 60% the size of a business card. Sadly, the core unit cannot currently be operated standalone; it is used by attaching it to a development motherboard equipped with interfaces like USB, HDMI, and GPIO, much as you would install RAM in a laptop.

The biggest feature of the Jetson is that it includes a GPGPU, which is basically essential in AI and particularly in deep learning. It is equipped with 128 CUDA cores based on the NVIDIA Maxwell architecture, although that is a somewhat older generation. It can be disheartening to know that the latest consumer GPUs are equipped with over 4,000 CUDA cores, but there are real benefits to having a platform that runs CUDA at this size and draws only 10 W of power. In the OS setup image mentioned later on, the CUDA library is already installed, and the GPU versions of TensorFlow and OpenCV can be used.

Please see the official site for detailed specifications.

Nvidia Jetson Nano

• Exterior

⟨⟨ Jetson Nano Exterior ⟩⟩

The exterior of the Jetson Nano looks like this.

As you can see in the picture, the module is mounted on the development board, and you can also see a gigantic heat sink.

Also, many have pointed out that on the reverse side of the board, under the USB ports, a chip capacitor sticks out and looks as though it was added after the fact. It looks like catching it on the corner of a desk or dropping the unit on this part might just break it. Given that, and since I had to develop on a bare circuit board, I used pieces of plastic to insulate the reverse side to prevent shorts.

⟨⟨ Jetson Nano reverse side ⟩⟩

As for where to place the board, the cardboard box the unit comes in is the official stand for it. (Make sure not to throw it away.)

• Comparisons with the Raspberry Pi 3 Model B+

Before getting down to business with the Jetson Nano, its overall size and interfaces prompted me to make a simple comparison with its somewhat rival embedded CPU board, the Raspberry Pi.

⟨⟨ Comparisons with the Raspberry Pi 3 B+ ⟩⟩

First, let's look at the size. I compared the development board with the Jetson Nano core board attached against the Raspberry Pi 3 Model B+ (just called the Raspberry Pi from now on). The Jetson Nano development board is 100 x 80 mm, and the Raspberry Pi is 86 x 56 mm. Since the Nano also has real thickness, it actually feels around twice as large as the Raspberry Pi.
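As a quick check on that impression, the footprint ratio can be worked out from the board dimensions quoted above. This small calculation covers area only and ignores thickness.

```python
def area_mm2(size):
    """Footprint area in square millimetres for a (width, depth) pair."""
    width, depth = size
    return width * depth


jetson_board = (100, 80)   # Jetson Nano development board, mm
raspberry_pi = (86, 56)    # Raspberry Pi 3 Model B+, mm

ratio = area_mm2(jetson_board) / area_mm2(raspberry_pi)
print(f"{area_mm2(jetson_board)} mm^2 vs {area_mm2(raspberry_pi)} mm^2, ratio {ratio:.2f}")
```

By footprint alone the Jetson Nano board is about 1.7 times larger, so the heatsink height accounts for the rest of the "twice as large" feeling.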

Now for the specs. I only want to compare the big picture items, so please see the official websites for all the details.

Differences in the interfaces are as follows.

⋅ Wireless environment: Jetson Nano = none, Raspberry Pi = WiFi + BT

⋅ USB: Jetson Nano = Ver 3.0 x 4 ports, Raspberry Pi = Ver. 2.0 x 4 ports

⋅ M.2: Jetson Nano = Yes, Type E, Raspberry Pi = no

⋅ External power connector: Jetson Nano = yes, Raspberry Pi = no

I did think that it would be convenient for the Jetson Nano development board to have WiFi, but you can use WiFi with it just by attaching a USB WiFi dongle, which costs around 1,000 yen. Also, although it is unconfirmed, it looks like the M.2 (Type E) slot on the Jetson Nano development board might accept a WiFi-BT card like Intel’s Wireless-AC type. That wouldn’t take up any USB ports, and would keep it nice and slim.

One interesting point is that the Jetson Nano is equipped with an external power connector as standard. This signifies that it has greater power consumption (too much for USB to handle). Actually, it is also possible to supply power through Micro USB, but that is limited to around 5 V/2 A (10 W).

You can check the current performance mode with the command “$ sudo nvpmodel -q.” If you want to run at maximum performance with “$ sudo nvpmodel -m 0,” an external power supply (5 V/4 A) is recommended.

On the other hand, there are some interfaces that are similar or the same. The GPIO connector is mostly the same as the one on the Raspberry Pi, and the Jetson Nano development board is also equipped with a CSI connector, which allows a Raspberry Pi camera to be connected as-is. This is likely a way to nudge Raspberry Pi users toward the Jetson Nano, but being able to share development assets is a major advantage.

• Setup

Next, I’ll talk about the setup for the Jetson Nano. For the most part, following the official guide and using a MicroSD card will let you install the OS with no problems. While another product in the family, the Jetson TX1, requires a separate Linux machine for setup, the Nano can be booted from a MicroSD card, allowing it to be set up by itself.

Getting Started With Jetson Nano Developer Kit

The standard OS for setup is a desktop edition of Linux based on Ubuntu 18.04. As the CPU is ARM-based, some modules and drivers may need to be built from source, but most general utilities can be obtained through apt-get and used the same way as on any normal Ubuntu Linux.

However, compared with normal PC specs, and because all storage is on a MicroSD card, speed and response times are a bit slower overall. Since copying files and building felt sluggish on I/O, I followed the site below to switch over to a high-speed USB drive. (Sadly, the unit still can’t boot without the MicroSD card.)

(Reference website: Jetson Nano – Run on USB Drive)

If using a MicroSD card, cards that support an Application Performance Class such as “A1” or “A2” would work well, as they are fast and offer good random access performance.

• Power

The Jetson Nano can be powered over micro USB. However, like the Raspberry Pi, it can draw a fair amount of power, so a smartphone charger may not make the cut. At the least, it would be ideal to use something like a Raspberry Pi power brick (over 3 A if possible). The Jetson Nano also includes an external power connector that can take up to 5 V x 4 A, and it looks like it would be best to use this connector for stable operation.

• Fan

Although a gigantic heatsink is already equipped, a 4 cm cooling fan can also be attached. I saw in some demonstrations that the CPU temperature gets close to 80 °C without a fan, and as the unit can become unstable at high temperatures during high-load operations and builds, using an attached fan or a desk fan is recommended. (The fan has a huge effect.)

⟨⟨ Jetson Nano + 6 cm Fan ⟩⟩

• Camera

As for cameras that can be used with the Jetson, most webcams that follow the UVC standard can be used without any problems.

(Positive tests: ELECOM UCAM-DLE300T, Buffalo BSWHD06M)

Also, like I mentioned before, as the board has a CSI connector, the Raspberry Pi camera can be used with the unit without any real setup. Although it may depend on the quality of the webcam, the camera connected through CSI showed less image lag than the webcam when used through OpenCV.